AI tools for data preprocessing

Related Tools:

DVC

DVC is an open-source platform for managing machine learning data and experiments. It provides a unified interface for working with data from various sources, including local files, cloud storage, and databases. DVC also includes tools for versioning data and experiments, tracking metrics, and automating compute resources. DVC is designed to make it easy for data scientists and machine learning engineers to collaborate on projects and share their work with others.
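As a rough illustration of the data-versioning idea, the sketch below identifies each version of a dataset by a hash of its bytes, so identical content always maps to the same version ID. This shows the general concept of content-addressed storage only. It is not DVC's API, and the names `content_hash` and `VersionStore` are hypothetical.

```python
import hashlib


def content_hash(data: bytes) -> str:
    """Return a hex digest that identifies this exact content."""
    return hashlib.sha256(data).hexdigest()


class VersionStore:
    """Hypothetical in-memory store that maps content hashes to data."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def add(self, data: bytes) -> str:
        """Store the data and return its version ID (the content hash)."""
        key = content_hash(data)
        self._objects[key] = data  # identical content is stored only once
        return key

    def get(self, key: str) -> bytes:
        """Retrieve the exact bytes recorded under a version ID."""
        return self._objects[key]


store = VersionStore()
v1 = store.add(b"id,label\n1,cat\n")
v2 = store.add(b"id,label\n1,cat\n2,dog\n")
print(v1 != v2)  # the changed dataset gets a different version ID
```

Because the ID is derived from the content, a small text file recording that ID is enough to pin a dataset version alongside the code that used it.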

ML Clever

ML Clever is a no-code machine learning platform that empowers users to build powerful ML models with one click, explore what-if scenarios to guide decisions, and create interactive dashboards to explain results. It combines automated machine learning, interactive dashboards, and flexible prediction tools in one platform, allowing users to transform data into business insights without the need for data scientists or coding skills.

Plat.AI

Plat.AI is automated predictive analytics software that offers model-building solutions for industries such as finance, insurance, and marketing. It provides a real-time decision-making engine that allows users to build and maintain AI models without any coding experience. The platform offers features like automated model building, data preprocessing tools, codeless modeling, and a personalized approach to data analysis. Plat.AI aims to make predictive analytics easy and accessible for users of all experience levels, ensuring transparency, security, and compliance in decision-making processes.

HappyML

HappyML is an AI tool designed to assist users in machine learning tasks. It provides a user-friendly interface for running machine learning algorithms without the need for complex coding. With HappyML, users can easily build, train, and deploy machine learning models for various applications. The tool offers a range of features such as data preprocessing, model evaluation, hyperparameter tuning, and model deployment. HappyML simplifies the machine learning process, making it accessible to users with varying levels of expertise.

ACHIV

ACHIV is an AI tool for idea validation and market research. It helps businesses make informed decisions based on real market needs by providing data-driven insights. The tool streamlines the market validation process, allowing quick adaptation and refinement of product development strategies. ACHIV offers its own approach to data collection and preprocessing, along with proprietary AI models for analysis and predictive forecasting. It is designed to assist entrepreneurs in understanding market gaps, exploring competitors, and enhancing investment decisions with real-time data.

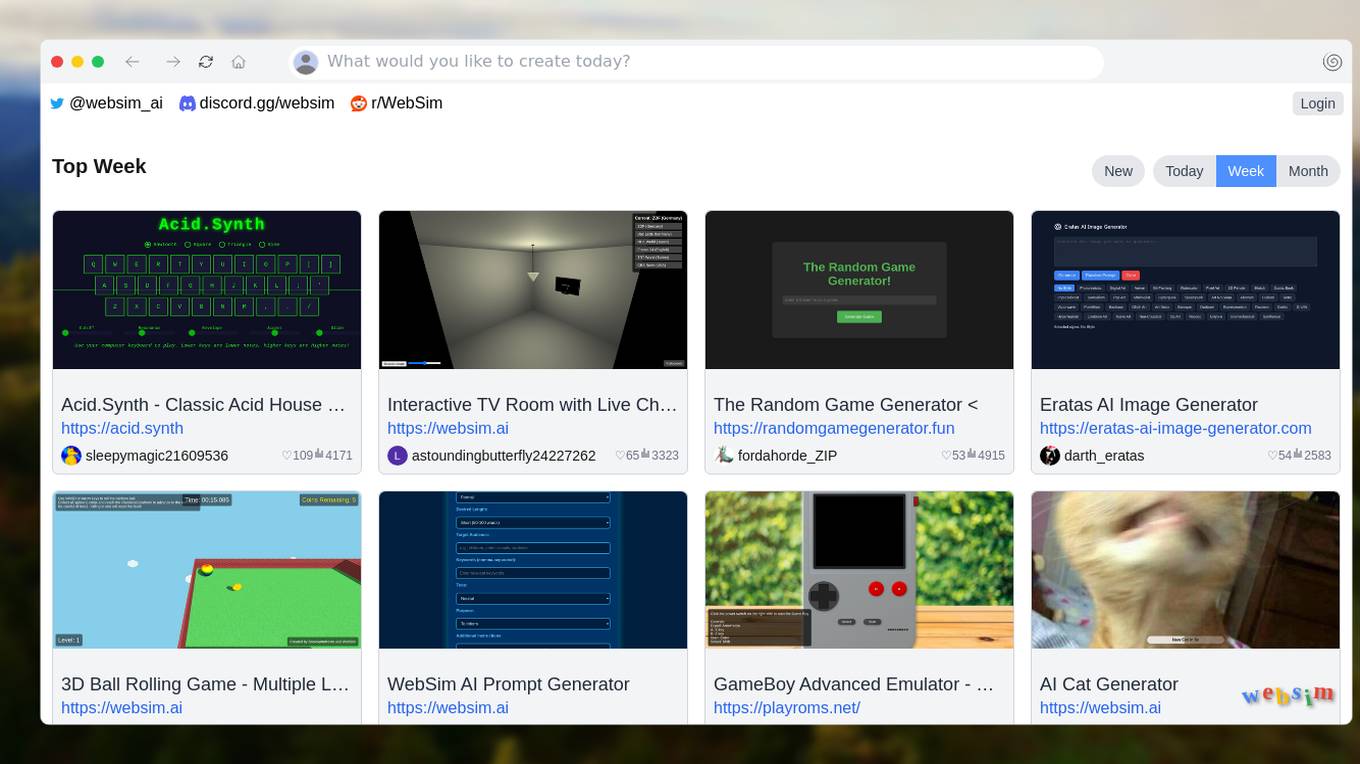

Websim.ai

Websim.ai is an advanced AI tool designed to provide users with a powerful platform for simulating and analyzing web data. With cutting-edge algorithms and machine learning capabilities, Websim.ai offers a comprehensive suite of tools for web data analysis, visualization, and prediction. Users can easily upload their data sets, perform complex analyses, and generate insightful reports to gain valuable insights into their web performance and user behavior. Whether you are a data scientist, marketer, or business owner, Websim.ai empowers you to make informed decisions and optimize your online presence.

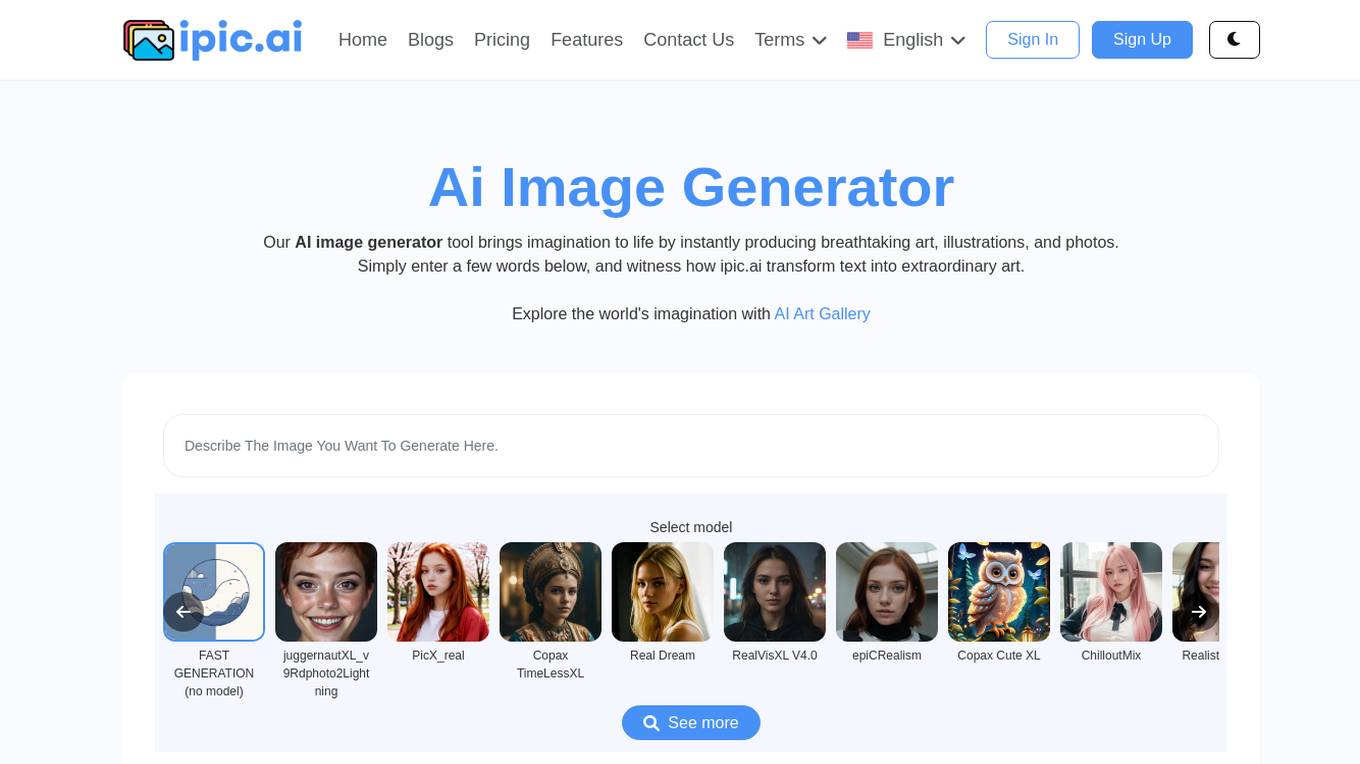

iPic.Ai

iPic.Ai is an AI-powered image generator tool that brings imagination to life by instantly producing breathtaking art, illustrations, and photos. Users can transform text into extraordinary art by entering a few words and exploring the world's imagination with the AI Art Gallery. The platform offers a variety of models for fast generation and allows users to generate high-quality and unique images for various purposes such as marketing campaigns, website banners, or social media content. iPic.Ai utilizes deep learning techniques like generative adversarial networks (GANs) to create realistic and coherent images from scratch, catering to diverse applications in entertainment, design, advertising, and medical research.

Eigen Technologies

Eigen Technologies is an AI-powered data extraction platform designed for business users to automate the extraction of data from various documents. The platform offers solutions for intelligent document processing and automation, enabling users to streamline business processes, make informed decisions, and achieve significant efficiency gains. Eigen's platform is purpose-built to deliver real ROI by reducing manual processes, improving data accuracy, and accelerating decision-making across industries such as corporates, banks, financial services, insurance, law, and manufacturing. With features like generative insights, table extraction, pre-processing hub, and model governance, Eigen empowers users to automate data extraction workflows efficiently. The platform is known for its unmatched accuracy, speed, and capability, providing customers with a flexible and scalable solution that integrates seamlessly with existing systems.

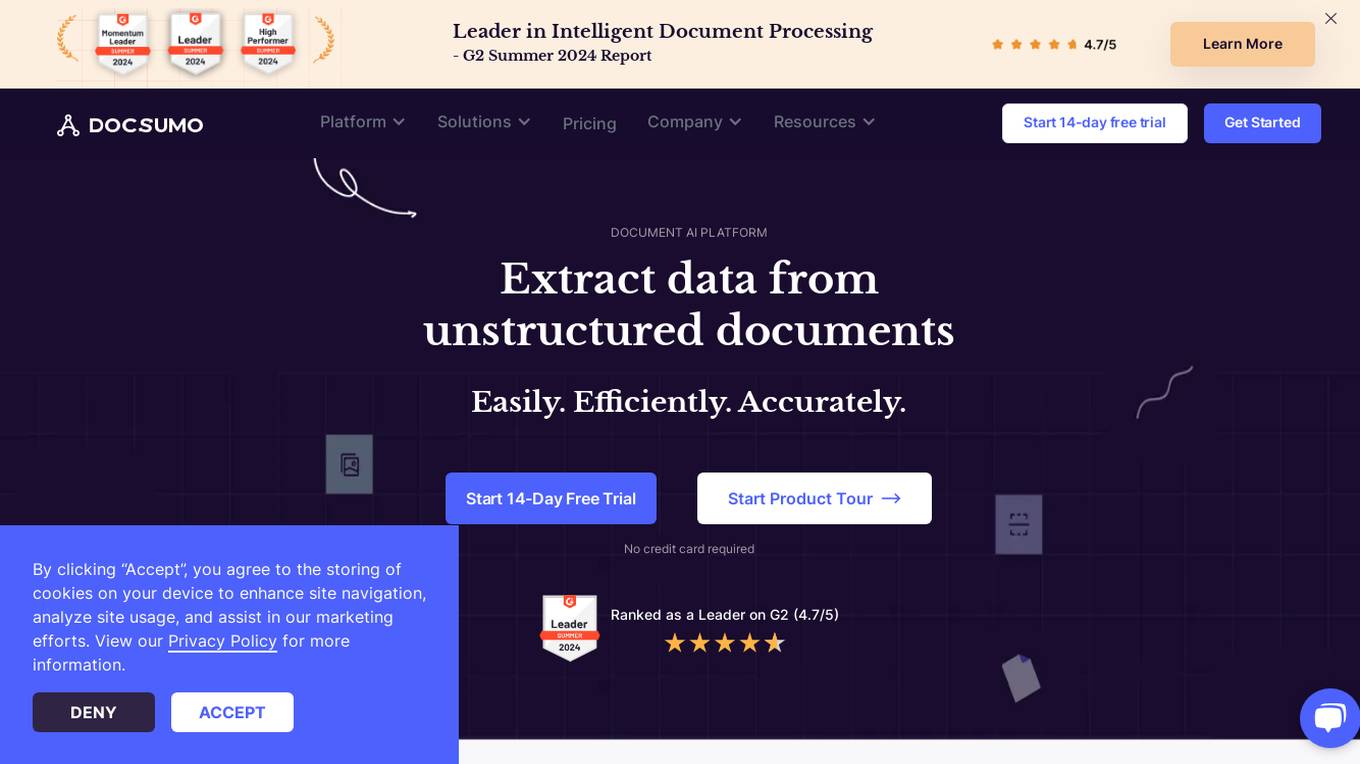

Docsumo

Docsumo is an advanced Document AI platform designed for scalability and efficiency. It offers a wide range of capabilities such as pre-processing documents, extracting data, reviewing and analyzing documents. The platform provides features like document classification, touchless processing, ready-to-use AI models, auto-split functionality, and smart table extraction. Docsumo is a leader in intelligent document processing and is trusted by various industries for its accurate data extraction capabilities. The platform enables enterprises to digitize their document processing workflows, reduce manual efforts, and maximize data accuracy through its AI-powered solutions.

ESTsoft

ESTsoft is a South Korean software company that develops and markets a wide range of software products and services, including operating systems, database management systems, and application software. The company was founded in 1995 and is headquartered in Seoul, South Korea. ESTsoft's mission is to "make the world more convenient and safer through AI." The company's products and services are used by a wide range of customers, including governments, businesses, and individuals. ESTsoft is a publicly traded company and is listed on the Korea Exchange. The company has a strong commitment to research and development and invests heavily in new technologies. ESTsoft has a number of partnerships with other companies, including Microsoft, IBM, and Oracle. The company is also a member of the World Economic Forum.

Compact Data Science

Compact Data Science is a data science platform that provides a comprehensive set of tools and resources for data scientists and analysts. The platform includes a variety of features such as data preparation, data visualization, machine learning, and predictive analytics. Compact Data Science is designed to be easy to use and accessible to users of all skill levels.

dataset.macgence

dataset.macgence is an AI-powered data analysis tool that helps users extract valuable insights from their datasets. It offers a user-friendly interface for uploading, cleaning, and analyzing data, making it suitable for both beginners and experienced data analysts. With advanced algorithms and visualization capabilities, dataset.macgence enables users to uncover patterns, trends, and correlations in their data, leading to informed decision-making. Whether you're a business professional, researcher, or student, dataset.macgence can streamline your data analysis process and enhance your data-driven strategies.

Kenniscentrum Data & Maatschappij

Kenniscentrum Data & Maatschappij is a website dedicated to legal, ethical, and societal aspects of artificial intelligence and data applications. It provides insights, guidelines, and practical tools for individuals and organizations interested in AI governance and innovation. The platform offers resources such as policy documents, training programs, and collaboration cards to facilitate human-AI interaction and promote responsible AI use.

Legal Data

Legal Data is a comprehensive legal research platform developed by lawyers for lawyers. It offers a powerful search feature that covers legal areas ranging from commercial to criminal law. The platform recognizes synonyms, legalese, and abbreviations, corrects typos, and provides suggestions as you type. Additionally, Legal Data includes an AI assistant called FlyBot, trained on carefully selected laws and cases, to provide accurate legal answers without fabricating information.

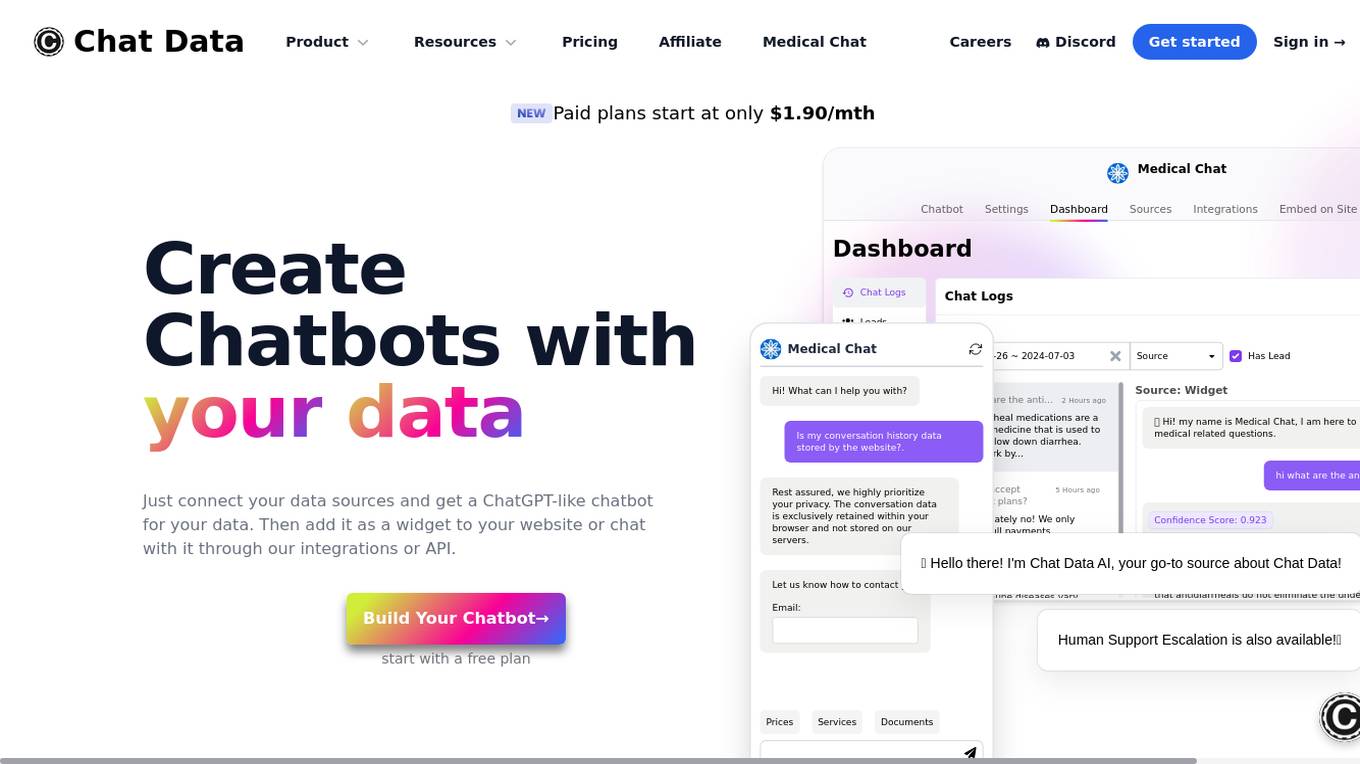

Chat Data

Chat Data is an AI application that allows users to create custom chatbots using their own data sources. Users can easily build and integrate chatbots with their websites or other platforms, personalize the chatbot's interface, and access advanced features like human support escalation and product updates synchronization. The platform offers HIPAA-compliant medical chat models and ensures data privacy by retaining conversation data exclusively within the user's browser. With Chat Data, users can enhance customer interactions, gather insights, and streamline communication processes.

Medical Chat

Medical Chat is an advanced AI assistant designed for healthcare professionals, providing instant and accurate medical answers for both human and veterinary medicine. Its capabilities include diagnosing medical conditions, generating differential diagnosis reports, creating patient-specific clinic plans, and offering comprehensive drug information. Medical Chat utilizes the latest LLM models, including ChatGPT 3.5 and 4.0, to deliver reliable and up-to-date medical knowledge. The platform also features a vast database of professional medical textbooks, veterinary books, and PubMed articles, ensuring evidence-based responses. With its HIPAA compliance and commitment to data privacy, Medical Chat empowers healthcare providers to enhance their diagnostic capabilities and improve patient outcomes.

Fullpath

Fullpath is an AI-powered Customer Data Platform (CDP) designed specifically for auto dealerships. It helps dealers organize and activate their data to create hyper-personalized customer experiences, optimize marketing campaigns, and improve sales performance. Fullpath offers features such as Data Management, Campaign Management, Data Activation, Digital Advertising, Website Engagement, VIN-Specific Ad Campaigns, Equity-Based Campaigns, and more. The platform leverages AI technology to provide deep audience segmentation, identity resolution, and powerful automations for targeted marketing. Fullpath aims to revolutionize automotive retail by integrating AI into dealership operations and enhancing customer engagement.

Isomeric

Isomeric is an AI tool that uses artificial intelligence to semantically understand unstructured text and extract specific data. It helps transform messy text into machine-readable JSON, enabling tasks such as web scraping, browser extensions, and general information extraction. With Isomeric, users can scale their data gathering pipeline in seconds, making it a valuable tool for various industries like customer support, data platforms, and legal services.
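To show the kind of output this describes (messy text in, machine-readable JSON out), here is a small Python sketch. It is a rule-based stand-in built on regular expressions, not Isomeric's AI-based method or API. The function name `extract_contact` and the field names are hypothetical.

```python
import json
import re


def extract_contact(text: str) -> dict:
    """Pull an email address and a dollar price out of free-form text."""
    email = re.search(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+", text)
    price = re.search(r"\$(\d+(?:\.\d{1,2})?)", text)
    return {
        "email": email.group(0) if email else None,
        "price_usd": float(price.group(1)) if price else None,
    }


messy = "Hi!! pls contact sales@example.com -- the unit costs $19.99 (approx)."
record = extract_contact(messy)
print(json.dumps(record))
```

A semantic extractor would aim to produce the same structured record without hand-written patterns, which is what makes it attractive for text whose layout varies from page to page.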

GetOData

GetOData is an AI-based data extraction tool designed for small-scale scraping. It allows users to discover and compare over 4,000 APIs for various use cases. The tool offers Apify Actors for extracting structured listings from any website and a Chrome Extension for seamless data extraction. With features like AI-based data extraction, side-by-side API comparisons, and automated scrolling for data collection, GetOData is a powerful tool for web scraping and data analysis.

Optimisateur de Performance GPT

Expert in performance optimization and data processing.

Your Business Data Optimizer Pro

A chatbot expert in business data analysis and optimization.

Data Dynamo

A friendly data science coach offering practical, useful, and accurate advice.

Dr. Classify

Upload a numerical dataset for a classification task, and it will apply data analysis and machine learning steps to build the best possible model.

PDF Ninja

I extract data and tables from PDFs to CSV, focusing on data privacy and precision.

Clinical Impact and Finance Guru

Expert in healthcare data analysis, coding, and clinical trials.

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

BCorpGPT

Query BCorp company data. All data is publicly available. United Kingdom only (for now).

Alas Data Analytics Student Mentor

Hello, I am Alas Academy's AI mentor for Data Analytics. You can ask me any question :)

Therocial Scientist

I am a digital scientist skilled in Python, here to assist with scientific and data analysis tasks.

data-prep-kit

Data Prep Kit is a community project aimed at democratizing and speeding up unstructured data preparation for LLM app developers. It provides high-level APIs and modules for transforming data (code, language, speech, visual) to optimize LLM performance across different use cases. The toolkit supports Python, Ray, Spark, and Kubeflow Pipelines runtimes, offering scalability from laptop to datacenter-scale processing. Developers can contribute new custom modules and leverage the data processing library for building data pipelines. Automation features include workflow automation with Kubeflow Pipelines for transform execution.

data-prep-kit

Data Prep Kit accelerates unstructured data preparation for LLM app developers. It allows developers to cleanse, transform, and enrich unstructured data for pre-training, fine-tuning, or instruct-tuning LLMs, or for building RAG applications. The kit provides modules for Python, Ray, and Spark runtimes, supporting Natural Language and Code data modalities. It offers a framework for custom transforms and uses Kubeflow Pipelines for workflow automation. Users can install the kit via PyPI and access a variety of transforms for data processing pipelines.
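The cleanse-and-transform flow described above can be pictured as a chain of small functions applied in order. The Python sketch below is a generic illustration under that assumption. It does not use Data Prep Kit's actual modules or API, and all function names in it are hypothetical.

```python
from typing import Callable

# A transform takes a list of documents and returns a new list.
Transform = Callable[[list[str]], list[str]]


def normalize_whitespace(docs: list[str]) -> list[str]:
    """Collapse runs of whitespace and trim each document."""
    return [" ".join(d.split()) for d in docs]


def drop_empty(docs: list[str]) -> list[str]:
    """Remove documents that are empty after cleaning."""
    return [d for d in docs if d]


def deduplicate(docs: list[str]) -> list[str]:
    """Remove exact duplicates while keeping first-seen order."""
    seen: set[str] = set()
    unique: list[str] = []
    for d in docs:
        if d not in seen:
            seen.add(d)
            unique.append(d)
    return unique


def run_pipeline(docs: list[str], transforms: list[Transform]) -> list[str]:
    """Apply each transform in sequence to the document list."""
    for transform in transforms:
        docs = transform(docs)
    return docs


raw = ["Hello   world", "hello world", "  ", "Hello world"]
clean = run_pipeline(raw, [normalize_whitespace, drop_empty, deduplicate])
print(clean)
```

Keeping each step a plain function from list to list means a custom step can be added to the chain without touching the others.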

Auto-Analyst

Auto-Analyst is an AI-driven agentic system for data analytics, designed to simplify and enhance the data science process. By integrating various specialized AI agents, this tool aims to make complex data analysis tasks more accessible and efficient for data analysts and scientists. Auto-Analyst provides a streamlined approach to data preprocessing, statistical analysis, machine learning, and visualization, all within an interactive Streamlit interface. It offers a plug-and-play Streamlit UI, agents specialized in data science, complete automation, and LLM-agnostic operation, and it is built using lightweight frameworks.

DB-GPT-Hub

DB-GPT-Hub is an experimental project leveraging Large Language Models (LLMs) for Text-to-SQL parsing. It includes stages like data collection, preprocessing, model selection, construction, and fine-tuning of model weights. The project aims to enhance Text-to-SQL capabilities, reduce model training costs, and enable developers to contribute to improving Text-to-SQL accuracy. The ultimate goal is to achieve automated question-answering based on databases, allowing users to execute complex database queries using natural language descriptions. The project has successfully integrated multiple large models and established a comprehensive workflow for data processing, SFT model training, prediction output, and evaluation.

LongRecipe

LongRecipe is a tool designed for efficient long context generalization in large language models. It provides a recipe for extending the context window of language models while maintaining their original capabilities. The tool includes data preprocessing steps, model training stages, and a process for merging fine-tuned models to enhance foundational capabilities. Users can follow the provided commands and scripts to preprocess data, train models in multiple stages, and merge models effectively.

automatic-KG-creation-with-LLM

This repository presents a (semi-)automatic pipeline for Ontology and Knowledge Graph Construction using Large Language Models (LLMs) such as Mixtral 8x22B Instruct v0.1, GPT-4o, GPT-3.5, and Gemini. It explores the generation of Knowledge Graphs by formulating competency questions, developing ontologies, constructing KGs, and evaluating the results with minimal human involvement. The project showcases the creation of a KG on deep learning methodologies from scholarly publications. It includes components for data preprocessing, prompts for LLMs, datasets, and results from the selected LLMs.

driverlessai-recipes

This repository contains custom recipes for H2O Driverless AI, which is an Automatic Machine Learning platform for the Enterprise. Custom recipes are Python code snippets that can be uploaded into Driverless AI at runtime to automate feature engineering, model building, visualization, and interpretability. Users can gain control over the optimization choices made by Driverless AI by providing their own custom recipes. The repository includes recipes for various tasks such as data manipulation, data preprocessing, feature selection, data augmentation, model building, scoring, and more. Best practices for creating and using recipes are also provided, including security considerations, performance tips, and safety measures.

LLamaTuner

LLamaTuner is a repository for the Efficient Finetuning of Quantized LLMs project, focusing on building and sharing instruction-following Chinese baichuan-7b/LLaMA/Pythia/GLM model tuning methods. The project enables multi-round chatbot training on a single Nvidia RTX 2080 Ti or RTX 3090. It uses bitsandbytes for quantization and is integrated with Hugging Face's PEFT and transformers libraries. The repository supports various models, training approaches, and datasets for supervised fine-tuning, LoRA, QLoRA, and more. It also provides tools for data preprocessing and offers models on the Hugging Face model hub for inference and finetuning. The project is licensed under Apache 2.0 and acknowledges contributions from various open-source contributors.

AIGC_text_detector

AIGC_text_detector is a repository containing the official code for the paper 'Multiscale Positive-Unlabeled Detection of AI-Generated Texts'. It includes detector models for both English and Chinese texts, along with stronger detectors developed with enhanced training strategies. The repository provides links to download the detector models, datasets, and necessary preprocessing tools. Users can train RoBERTa and BERT models on the HC3-English dataset using the provided scripts.

DL-Hub

DL-Hub is a deep learning repository containing various study materials and code projects in the fields of machine learning, deep learning, computer vision, natural language processing, and web crawling. It includes paper analysis, deep learning projects, graph neural network replications, machine learning algorithms, transformer models, and optimization implementations. The repository aims to provide valuable resources for learning and research in the deep learning and machine learning domains.

LLMRec

LLMRec is a PyTorch implementation for the WSDM 2024 paper 'Large Language Models with Graph Augmentation for Recommendation'. It is a framework that enhances recommender systems by applying LLM-based graph augmentation strategies. The tool aims to make the most of content within online platforms to augment interaction graphs by reinforcing user-item (u-i) interaction edges, enhancing item node attributes, and conducting user node profiling from a natural language perspective.

llm-course

The LLM course is divided into three parts:

1. 🧩 **LLM Fundamentals** covers essential knowledge about mathematics, Python, and neural networks.
2. 🧑‍🔬 **The LLM Scientist** focuses on building the best possible LLMs using the latest techniques.
3. 👷 **The LLM Engineer** focuses on creating LLM-based applications and deploying them.

For an interactive version of this course, I created two **LLM assistants** that will answer questions and test your knowledge in a personalized way:

* 🤗 **HuggingChat Assistant**: Free version using Mixtral-8x7B.
* 🤖 **ChatGPT Assistant**: Requires a premium account.

**📝 Notebooks**: A list of notebooks and articles related to large language models.

**Tools**

| Notebook | Description |
|----------|-------------|
| 🧐 LLM AutoEval | Automatically evaluate your LLMs using RunPod. |
| 🥱 LazyMergekit | Easily merge models using MergeKit in one click. |
| 🦎 LazyAxolotl | Fine-tune models in the cloud using Axolotl in one click. |
| ⚡ AutoQuant | Quantize LLMs in GGUF, GPTQ, EXL2, AWQ, and HQQ formats in one click. |
| 🌳 Model Family Tree | Visualize the family tree of merged models. |
| 🚀 ZeroSpace | Automatically create a Gradio chat interface using a free ZeroGPU. |

imodels

Python package for concise, transparent, and accurate predictive modeling. All sklearn-compatible and easy to use. _For interpretability in NLP, check out our new package: imodelsX_

100days_AI

The 100 Days in AI repository provides a comprehensive roadmap for individuals to learn Artificial Intelligence over a period of 100 days. It covers topics ranging from basic programming in Python to advanced concepts in AI, including machine learning, deep learning, and specialized AI topics. The repository includes daily tasks, resources, and exercises to ensure a structured learning experience. By following this roadmap, users can gain a solid understanding of AI and be prepared to work on real-world AI projects.

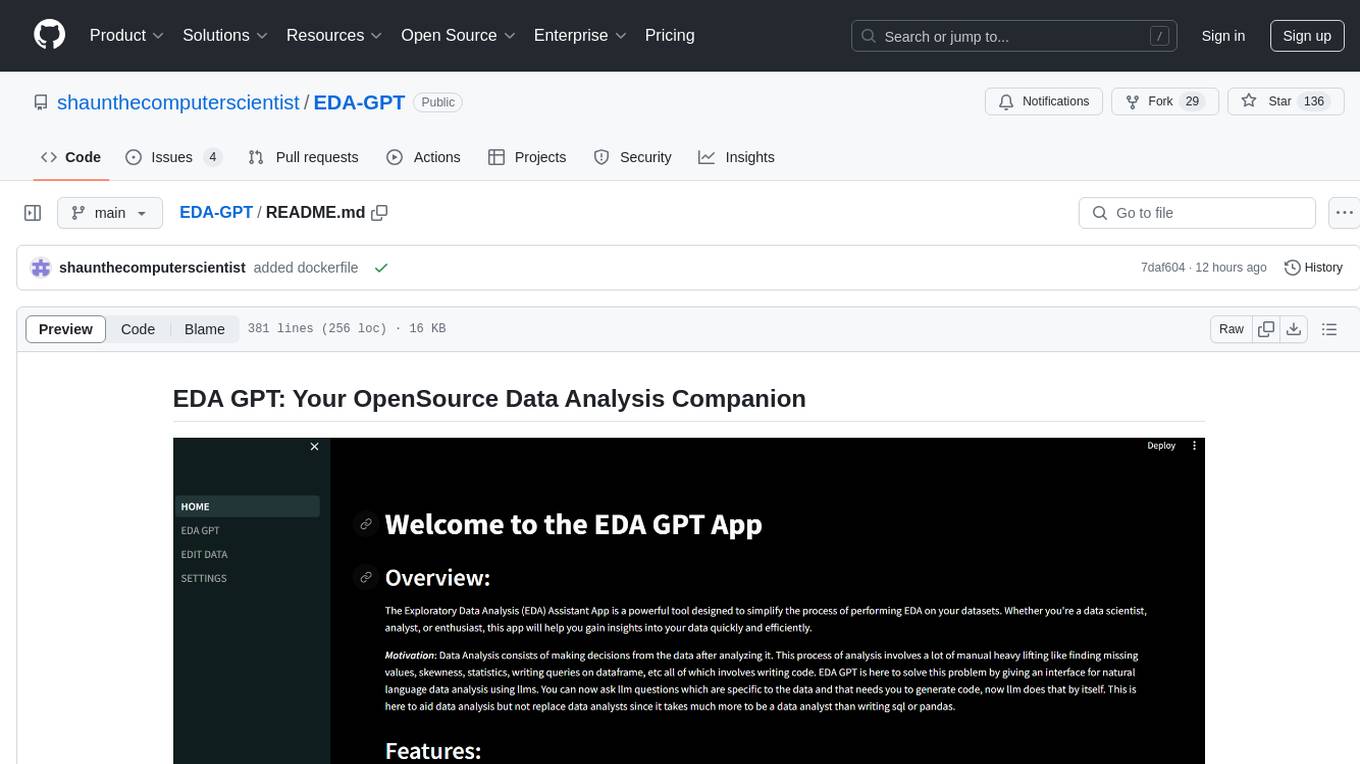

EDA-GPT

EDA GPT is an open-source data analysis companion that offers a comprehensive solution for structured and unstructured data analysis. It streamlines the data analysis process, empowering users to explore, visualize, and gain insights from their data. EDA GPT supports analyzing structured data in various formats like CSV, XLSX, and SQLite, generating graphs, and conducting in-depth analysis of unstructured data such as PDFs and images. It provides a user-friendly interface, powerful features, and capabilities like comparing performance with other tools, analyzing large language models, multimodal search, data cleaning, and editing. The tool is optimized for maximal parallel processing, searching internet and documents, and creating analysis reports from structured and unstructured data.

erag

ERAG is an advanced system that combines lexical, semantic, text, and knowledge graph searches with conversation context to provide accurate and contextually relevant responses. This tool processes various document types, creates embeddings, builds knowledge graphs, and uses this information to answer user queries intelligently. It includes modules for interacting with web content, GitHub repositories, and performing exploratory data analysis using various language models.

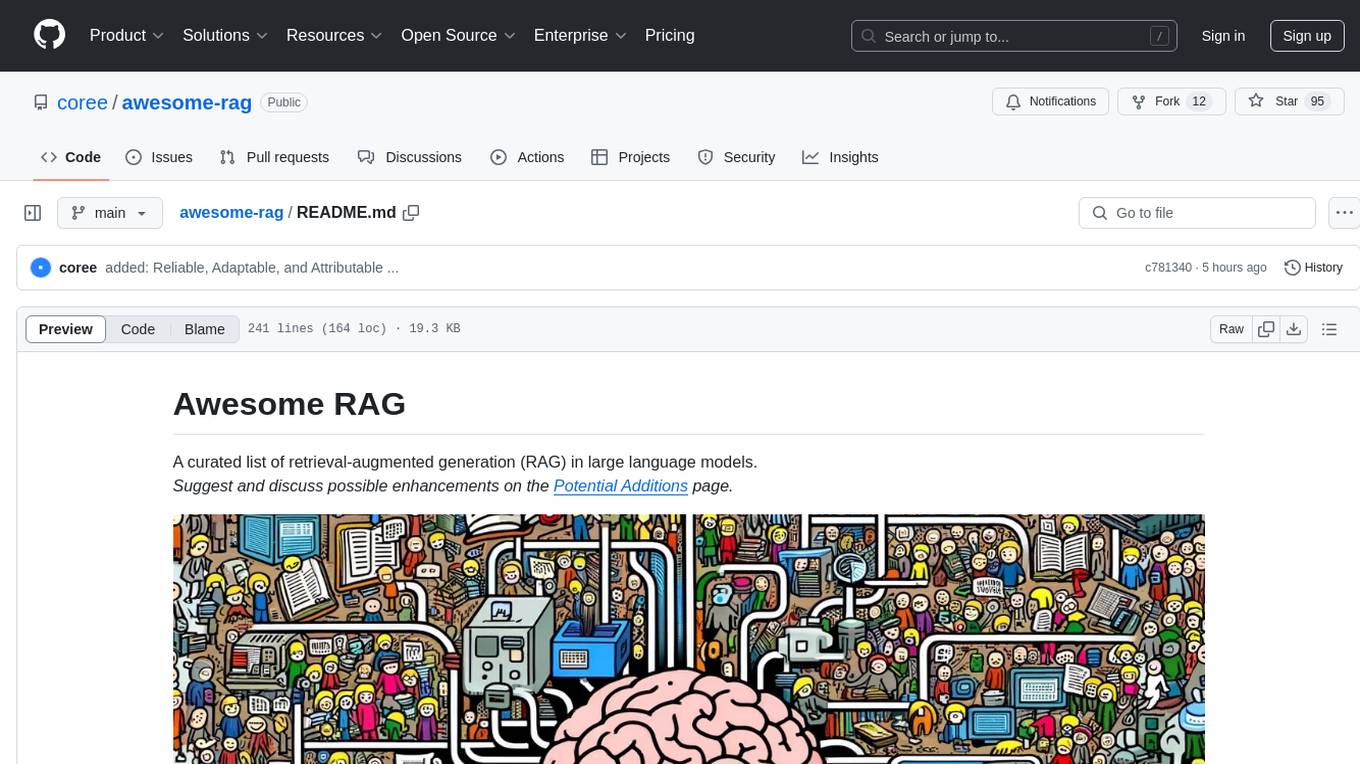

awesome-rag

Awesome RAG is a curated list of resources on retrieval-augmented generation (RAG) in large language models. It includes papers, surveys, general resources, lectures, talks, tutorials, workshops, tools, and other collections related to RAG. The repository aims to provide a comprehensive overview of the latest advancements, techniques, and applications in the field.

F1 Analytics

Bot expert in F1 data analysis and race insights.